Salesforce Performance - Some Thoughts

Late last year, I was interviewed by Xi Xiao on his Salesforce Way podcast about Salesforce Performance, a topic I have spent a lot of time thinking about over the years I have been working with Salesforce. You can listen to the episode now on Xi’s website, and I wanted to share some more thoughts on the topic here.

Back end vs Front end

As Xi mentioned during the conversation, we can think of separating our performance concerns into the back end and the front end. For the front end, our concern can be put simply as “how quickly can we make the page render”. There are a number of things that can impact this, and some key areas we should look into are as follows:

- How much data are we sending back and forth? Do we need all this data?

- Are there any blocking or long running functions on the page? If so can we improve or remove them?

- How many requests are there to the server? Can we reduce this somehow?

Certain things will be outside of our control, for example the end user’s internet speed. But by adopting the correct mindset we can try to mitigate that as much as possible.
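To make the first and third questions above a little more concrete, here is a minimal TypeScript sketch of two habits that help: trimming each record down to the fields the page actually renders, and grouping record ids into batches so that one request can serve several records. The `AccountRecord` shape and the helper names `pickFields` and `chunk` are hypothetical and exist only for illustration; they are not part of any Salesforce API.

```typescript
// Hypothetical record shape; the field names are illustrative only.
type AccountRecord = {
  id: string;
  name: string;
  description: string;
  billingAddress: string;
};

// Keep only the fields the page actually renders, so less data is sent.
function pickFields<T extends object, K extends keyof T>(
  record: T,
  fields: readonly K[]
): Pick<T, K> {
  const slim = {} as Pick<T, K>;
  for (const field of fields) {
    slim[field] = record[field];
  }
  return slim;
}

// Group items into batches so one request can cover several records.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (size <= 0) {
    throw new Error("size must be positive");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

const account: AccountRecord = {
  id: "001",
  name: "Acme",
  description: "A long description the list view never shows",
  billingAddress: "1 Example Street",
};

// Only id and name travel to the page.
const listRow = pickFields(account, ["id", "name"]);

// Five ids become three requests instead of five.
const idBatches = chunk(["a", "b", "c", "d", "e"], 2);
```

Neither helper is clever, and that is rather the point: most of the gain comes from asking whether the data and the round trips are needed at all.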

For the back end of our Salesforce applications we have a number of considerations to make, some of which are not directly “code performance” related. Salesforce offers a wide variety of declarative tools leading to the common discussion of clicks vs code for a solution.

From a running speed perspective, code should always be faster. Put simply, this is because Visual Flow, Process Builder, Workflow and so on are all just abstractions over programming. The lower down the abstraction chain you are, the faster you will be. However, this is where we have to consider “developer performance”: how quick is the solution to deliver, and how easy is it to maintain? For a simple solution updating a single field, doing that declaratively is going to be the best way to go. As data volumes and complexity increase, though, code becomes the far superior option. This is all about tradeoffs and knowing when to use the different tools, and those choices can impact the performance of your solution.

It is also worth remembering that your back end performance will often impact your front end performance. If you have a button on a page or component that is performing some task inefficiently, this will slow down the response to the front end and hamper the user’s experience. The two parts are very closely connected and should often be viewed holistically as well as independently.

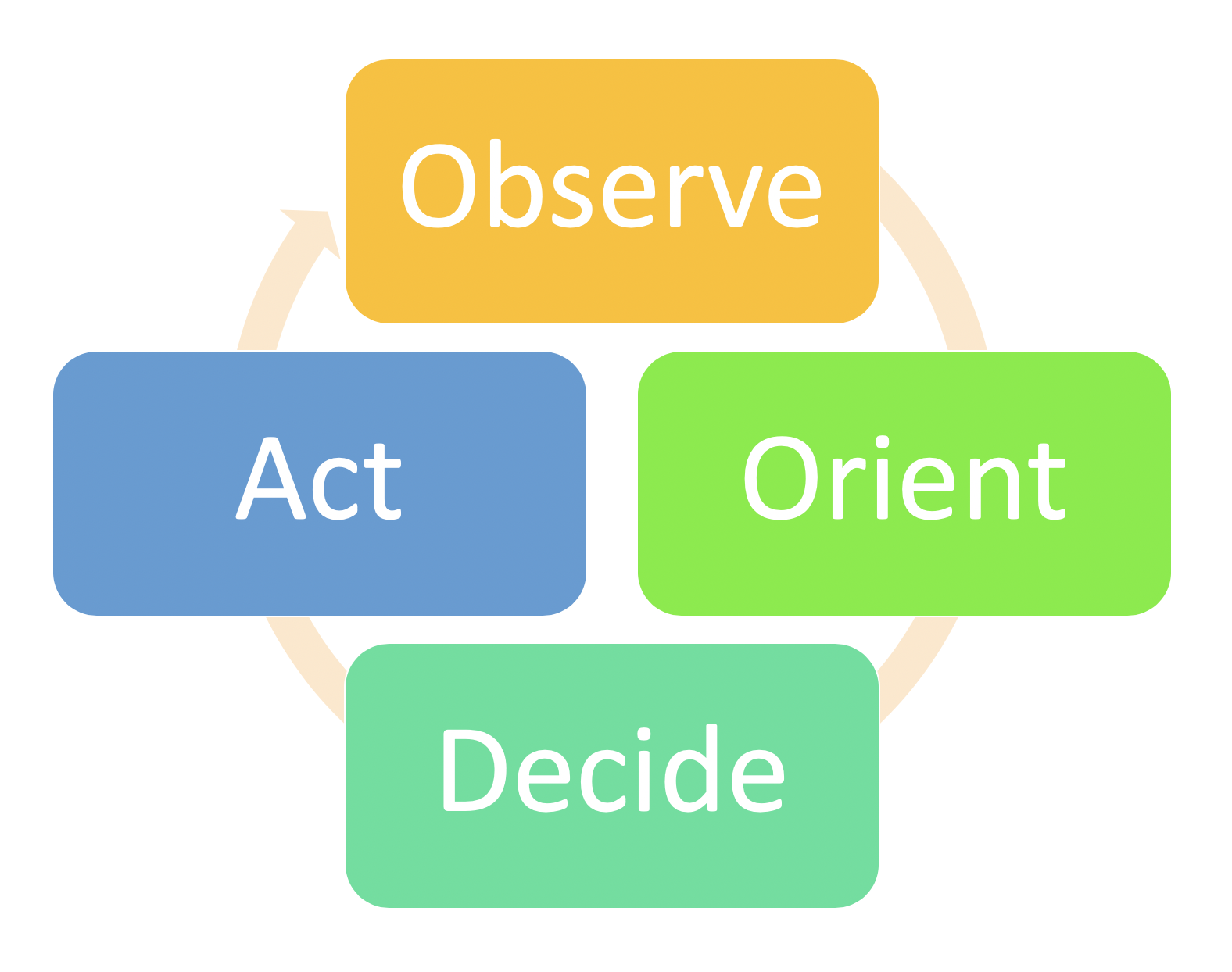

## The OODA Method

Now that we know what to consider, how do we go about actually measuring performance and making improvements? As I mentioned in the interview, I am a fan of the OODA method or OODA loop, a system devised by military strategist John Boyd.

The OODA loop has four key stages: Observe, Orient, Decide, Act. Let’s look at what each of these stages means for us.

Observe

This is where we measure our system using things like the Chrome Developer Tools, Limits statements and debug information. We retrieve a set of measurements and data that are observations of how our system is operating in different circumstances.
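As a rough illustration of what collecting observations can look like in code, the TypeScript sketch below times a piece of work, records each timing against a label, and summarises the samples. It is a generic helper under my own assumptions, not a Salesforce API: the names `observe`, `summarise` and `Observation` are hypothetical, and in practice the numbers would come from the browser tools, Limits statements and debug logs mentioned above.

```typescript
// One timing sample for a labelled piece of work.
type Observation = { label: string; elapsedMs: number };

// Run the work, record how long it took, and return its result.
// The clock is a parameter so the helper can be tested deterministically.
function observe<T>(
  label: string,
  work: () => T,
  log: Observation[],
  now: () => number = Date.now
): T {
  const start = now();
  try {
    return work();
  } finally {
    // Record the timing even if the work throws.
    log.push({ label, elapsedMs: now() - start });
  }
}

// Summarise all samples recorded for one label.
function summarise(
  log: readonly Observation[],
  label: string
): { count: number; medianMs: number; maxMs: number } | undefined {
  const samples = log
    .filter((o) => o.label === label)
    .map((o) => o.elapsedMs)
    .sort((a, b) => a - b);
  if (samples.length === 0) {
    return undefined;
  }
  const mid = Math.floor(samples.length / 2);
  const medianMs =
    samples.length % 2 === 1
      ? samples[mid]
      : (samples[mid - 1] + samples[mid]) / 2;
  return { count: samples.length, medianMs, maxMs: samples[samples.length - 1] };
}
```

Taking several samples and looking at the median and the worst case, rather than a single run, gives a fairer picture of how the system behaves in different circumstances.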

Orient

Once we have this data we use it to build up a mental model of how the system is actually operating and what we anticipate happening in the future. This is the key step, taking the data and making a useful model of the situation with it.

Decide

We now decide what to do - if anything - about the information we now have. It is perfectly reasonable to decide here to observe further, however, it is worth remembering that more data will not always help.

Act

If we have decided to make changes to the solution, we make them and return to observing to see if they have had the desired effect.
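The Decide and Act stages can be sketched in the same spirit. The TypeScript below is only one possible way to encode them, and the thresholds in `Budget` are hypothetical values I have made up for the example: with too few samples we go back to observing, if the worst sample exceeds the target we act, and after acting we compare fresh observations against the old ones.

```typescript
type Decision = "act" | "observe-more" | "leave-alone";

// Hypothetical thresholds; real values depend on the system and its users.
interface Budget {
  targetMs: number; // slowest response we are willing to accept
  minSamples: number; // how many observations we want before deciding
}

// Decide: turn a set of observed timings into one of three outcomes.
function decide(samplesMs: readonly number[], budget: Budget): Decision {
  if (samplesMs.length < budget.minSamples) {
    return "observe-more";
  }
  const worst = Math.max(...samplesMs);
  return worst > budget.targetMs ? "act" : "leave-alone";
}

// Act, then observe again: did the change lower the average timing?
function hadDesiredEffect(
  beforeMs: readonly number[],
  afterMs: readonly number[]
): boolean {
  if (beforeMs.length === 0 || afterMs.length === 0) {
    return false;
  }
  const mean = (xs: readonly number[]): number =>
    xs.reduce((sum, x) => sum + x, 0) / xs.length;
  return mean(afterMs) < mean(beforeMs);
}

const budget: Budget = { targetMs: 200, minSamples: 3 };
const nextStep = decide([100, 250, 120], budget); // "act": 250 exceeds the target
```

The `minSamples` check is a reminder that deciding to observe further is a valid outcome, while the fixed target stops us from collecting data forever.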

I personally like this model because it feels natural to me - for most developers/programmers out there this is the way they work. Make some code, test that it works, see what is happening and then update and repeat. Using such a framework can also be helpful in formalising the process to get greater buy-in on technical debt reduction and updates.

When Things Go Wrong

I want to wrap up talking about something that I mentioned on the podcast which really lies at the heart of why this is important. When I am training new developers on Salesforce this is also a point I labour when discussing testing.

Things never go wrong at a good time

In all the years I have been working in software, nothing has ever gone wrong at a “good time”. It has never been at the start of a sprint, just as we are looking at that area. It has almost always been during a go-live, data deployment or surge.

I was recently working with a retail client for whom Black Friday is unsurprisingly a major event. We had tested and retested the solution a million times for them to ensure nothing went wrong on Black Friday as it was the time when volumes would suddenly ratchet up. If things went wrong then, it would have been game over, but thankfully we had worked hard to anticipate such issues and avoid anything going wrong.

It is during these busy times that the system will be used at a higher capacity, in a greater variety of ways and when performance can suddenly slow or cause a failure. Putting some thought into this up front, and it does not need to be more than being conscious of these areas as you are developing, can lead to you having a much happier client base in the long run.

I hope you enjoyed some of these additional details and the podcast episode with Xi. I would like to thank Xi again for a great conversation and for having me on. If you would like to read more about Salesforce Performance, I wrote a blog post a couple of years ago on “Salesforce Application Performance” based on a talk I gave at London’s Calling 2017.